If you’ve made a mistake deducting CIS tax from a subcontractor, submitted the return to HMRC, and now have to work out how to correct it, this post is for you! (And for me, because that’s what just happened, and the Xero documentation and initial support response were wrong.)

Quick version:

- Void the incorrect invoice (which in turn means first unreconciling any payments made)

- Create a new, correct invoice

- Allocate the payments to the new invoice

- Resubmit the return

Long version:

CIS is the Construction Industry Scheme. It’s a tax that is deducted from subcontractors and paid directly to the government. We have it because the building industry had a high number of small contractors who would be paid and then vanish or go out of business without ever paying their income tax. By making the larger contractors deduct a percentage at source (typically 20%, so likely less than the income tax due), it protects the public revenue.
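To make the deduction concrete, here is a minimal sketch of the arithmetic. It assumes the typical 20% rate mentioned above, that the deduction applies to the labour element only (not materials, and with VAT left out entirely), and that rounding is to the nearest penny. The function name `cis_deduction` is made up for illustration and is not anything from Xero or HMRC.

```python
from decimal import Decimal, ROUND_HALF_UP

# Assumption: the typical 20% rate; use whatever rate applies to your subcontractor.
STANDARD_RATE = Decimal("0.20")


def cis_deduction(labour, materials, rate=STANDARD_RATE):
    """Return (deduction, net_payment) for an invoice, both excluding VAT.

    Assumption: the deduction is taken from labour only; materials pass through.
    """
    labour = Decimal(labour)
    materials = Decimal(materials)
    # Rounding to the nearest penny is an assumption for this sketch.
    deduction = (labour * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    net_payment = labour + materials - deduction
    return deduction, net_payment


deduction, net = cis_deduction("1000.00", "250.00")
print(deduction, net)  # 200.00 1050.00
```

So on £1,000 of labour and £250 of materials, £200 goes to HMRC and the subcontractor is paid £1,050.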

So far, so good.

Every month we submit a return to HMRC saying whose tax we have collected, and HMRC take the money from our bank via direct debit.

The dates are based on ‘cash accounting’ which means the tax is ‘taken’ on the day we pay them. It ignores their invoice date, so if they bill on 30th Jan and we pay on the 10th Feb the tax is reported as February.

This is different to VAT, where the tax applies on the date the invoice is raised (the tax point).
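Here is a rough sketch of how the CIS date rule picks the return period. One detail worth knowing: CIS tax months run from the 6th of one month to the 5th of the next, so ‘February’ in the example above really means the return covering 6th Feb to 5th March. The function name `cis_tax_month_start` and the 2024 dates are mine, purely for illustration.

```python
from datetime import date


def cis_tax_month_start(payment_date):
    """Return the 6th that starts the CIS tax month containing payment_date."""
    if payment_date.day >= 6:
        return date(payment_date.year, payment_date.month, 6)
    # Days 1 to 5 belong to the tax month that began on the 6th of the previous month.
    if payment_date.month == 1:
        return date(payment_date.year - 1, 12, 6)
    return date(payment_date.year, payment_date.month - 1, 6)


invoice_date = date(2024, 1, 30)  # ignored for CIS purposes
payment_date = date(2024, 2, 10)  # this is the date that matters
print(cis_tax_month_start(payment_date))  # 2024-02-06
```

The invoice date never enters the calculation, which is the whole point: only the payment date decides which return the deduction lands in.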

If we make a mistake, we must promptly correct the submission. For example, I deducted CIS from a roofing contractor when I shouldn’t have, because it was a gutter-cleaning job, which is outside the scope of CIS.

I cannot submit a negative number on my next return (the HMRC system doesn’t work that way). Instead I must resubmit a corrected return. There is no penalty for a resubmission.

Typically, to correct accounts we would raise a ‘credit note’ to reverse the transaction and then create a new invoice. However, credit notes aren’t applied to past CIS returns. Xero does some strange amortisation in the background too (I once had an issue when materials and labour were switched, and the correction took me hours to figure out because what Xero showed on my screen was not the same as what it did behind the scenes when creating the report).

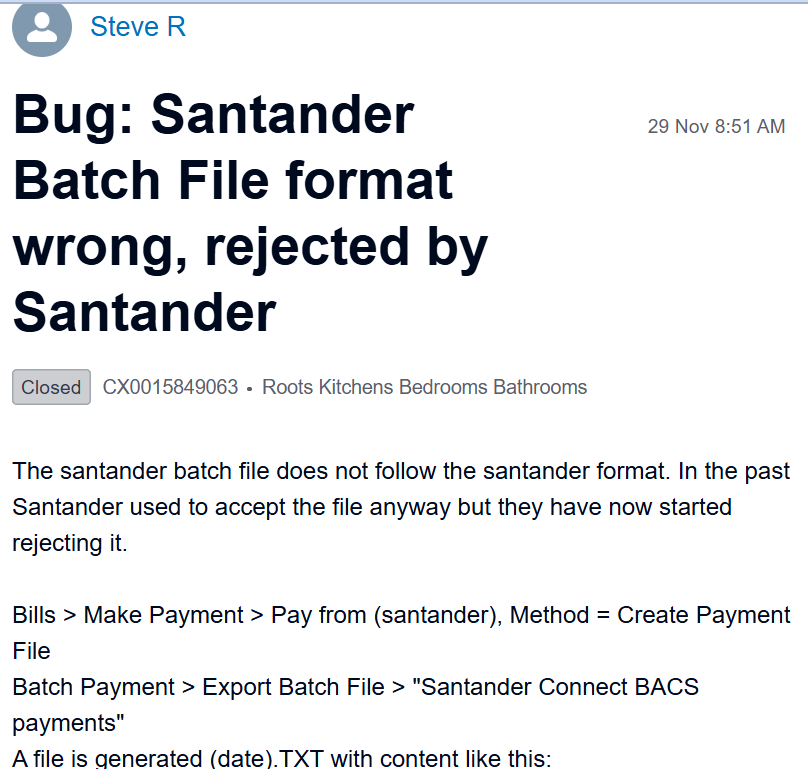

Xero’s first answer was that I could not make the correction in Xero and would need to find another way of reporting the change, but HMRC said they require all commercial software to be able to resubmit returns. HMRC were very helpful on the phone, and the advisor suggested I could use their manual web page submission system, which would replace our Xero-submitted return. But I figured that if HMRC required the software to do it, it could, and I just needed to work out how.

I thought around the problem and asked Xero what would happen if I voided the original invoice? I assumed that meant it would no longer appear to be included in the past CIS submission, which would change the numbers and I could resubmit the correction. I was worried because of my past experience of Xero hiding things but they confirmed it would indeed allow me to resubmit a corrected return.
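To show why voiding changes the numbers, here is a toy model of a monthly return. This is only my mental picture, not Xero’s actual data model or API: the `Invoice` class, the `return_total` function, the subcontractor names and all the figures are hypothetical. It assumes a return simply sums the CIS deducted on non-voided invoices whose payment date falls in the period.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass
class Invoice:
    subcontractor: str
    cis_deducted: Decimal
    payment_date: date
    voided: bool = False


def return_total(invoices, period_start, period_end):
    """Sum CIS deductions on non-voided invoices paid within the period."""
    return sum(
        (inv.cis_deducted for inv in invoices
         if not inv.voided and period_start <= inv.payment_date <= period_end),
        Decimal("0"),
    )


period_start, period_end = date(2024, 2, 6), date(2024, 3, 5)
invoices = [
    Invoice("Roofer", Decimal("200.00"), date(2024, 2, 10)),  # CIS deducted in error
    Invoice("Bricklayer", Decimal("300.00"), date(2024, 2, 20)),
]
before = return_total(invoices, period_start, period_end)

# Void the incorrect invoice and add a non-CIS replacement paid on the same date.
invoices[0].voided = True
invoices.append(Invoice("Roofer", Decimal("0.00"), date(2024, 2, 10)))
after = return_total(invoices, period_start, period_end)

print(before, after)  # 500.00 300.00
```

In this model the voided invoice drops out of the original period, so the recalculated total differs from what was submitted, and that difference is what the resubmitted return corrects.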

In my case, I’d deducted CIS on a payment when I shouldn’t have, so I just reallocated the payment to the new non-CIS invoice I created.

Remember that CIS is ‘cash accounting’, so if you are allocating or part-paying some CIS in a corrected return, be careful to record the payment on the correct date (in the past, as it were). Entering today’s date, just because today is the day you are making the correction, will probably not give the answer you expect.

I hope this helps someone!